2. Understanding the Concept

2.1. Introduction

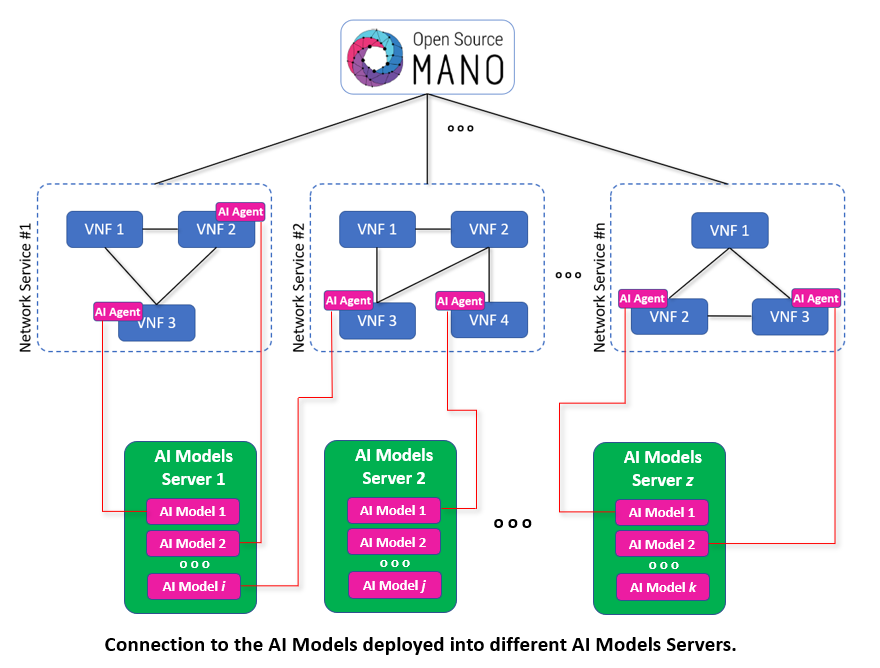

At a high level, the architecture of the AI-Agents for OSM solution is divided into two main functional blocks (see the figure above):

- The AI Agents themselves, which can be attached to VNFs to provide them with AI/ML capabilities.

- The so-called AI Models Server, where the AI Models (AI algorithms) used by the AI Agents are hosted.

As the figure shows, different AI Models Server instances can host different AI models. AI-Agents communicate with these models to perform their AI-related functions. Since some AI Models may have highly demanding computational requirements, we consider it better to host them on an external server (the AI Models Server), so that they do not overload the primary function of the VNFs to which they are associated.

Accordingly, the AI-Agent component can be seen as a proxy between the VNF and the AI Models. In other words, “the intelligence” actually resides in the AI Models Server, while the AI-Agent is just “the interface” between the VNF and the AI Models hosted there.

The AI Models Server has been envisioned as a component external to OSM. Like the VIM, it has to be installed alongside OSM to provide the required AI/ML functionality. However, the AI-Agents for OSM solution does not require any specific software to implement the AI Models Server functional block. The only condition is that the AI models hosted on the AI Models Server are accessible through a REST API.

This allows an implementation-agnostic approach: the AI Models Server can be based on common open source solutions in the field of Artificial Intelligence (TensorFlow Serving, PyTorch, or others), or on proprietary solutions that you may already own.
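As an illustration of the REST requirement, the following minimal sketch shows how an AI Agent might query a model, assuming the AI Models Server is TensorFlow Serving and exposes its standard `/v1/models/<name>:predict` endpoint. The server URL, the model name `vnf_scaler`, and the feature vector are hypothetical; other servers will expose different paths and payloads.

```python
import json
import urllib.request


def build_predict_request(server_url, model_name, features):
    """Build the URL and JSON body for a TensorFlow Serving-style predict call."""
    url = f"{server_url.rstrip('/')}/v1/models/{model_name}:predict"
    # TensorFlow Serving's row format expects a list of instances.
    body = json.dumps({"instances": [features]}).encode("utf-8")
    return url, body


def query_model(server_url, model_name, features, timeout=5.0):
    """Send one feature vector to the model and return its first prediction."""
    url, body = build_predict_request(server_url, model_name, features)
    request = urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read())["predictions"][0]


if __name__ == "__main__":
    # Hypothetical server address and model name, for illustration only.
    url, body = build_predict_request("http://ai-models:8501", "vnf_scaler", [0.2, 0.7])
    print(url)
    print(body.decode("utf-8"))
```

Keeping the request construction separate from the network call makes it easier to swap in a different AI Models Server: only `build_predict_request` and the response parsing would need to change.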

Although the intelligence of the AI Agents is actually hosted in the AI Models Server, the role of the AI-Agents is not minor: they collect and normalize the data used to train the AI models (during the training stage) and pass data to the AI Models Server in the proper format (during the production stage). They also interface with OSM in two ways. First, OSM prepares the Execution Environment with the runtime variables (VNF instance ID) required to deploy the AI Agents. Second, the AI Agents request the necessary Service Assurance actions (VNF scaling or alerting) when needed.
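The normalization step mentioned above could look like the following minimal sketch. It assumes min-max scaling with known per-metric bounds, which is only one of several possible normalization schemes; the metric names and bounds are hypothetical.

```python
def normalize_metrics(sample, bounds):
    """Scale each metric to [0, 1] using known (min, max) bounds.

    Values outside the bounds are clamped so the model never sees
    inputs outside the range it was trained on.
    """
    normalized = {}
    for name, value in sample.items():
        low, high = bounds[name]
        if high == low:
            # Degenerate range: avoid dividing by zero.
            normalized[name] = 0.0
            continue
        scaled = (value - low) / (high - low)
        normalized[name] = min(1.0, max(0.0, scaled))
    return normalized


if __name__ == "__main__":
    # Hypothetical metrics and bounds, for illustration only.
    bounds = {"cpu": (0.0, 100.0), "mem": (0.0, 2.0)}
    print(normalize_metrics({"cpu": 50.0, "mem": 3.0}, bounds))
```

Whatever scheme is chosen, the same transformation has to be applied in both the training and production stages so that the model receives consistent inputs.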

2.2. What about AI Models?

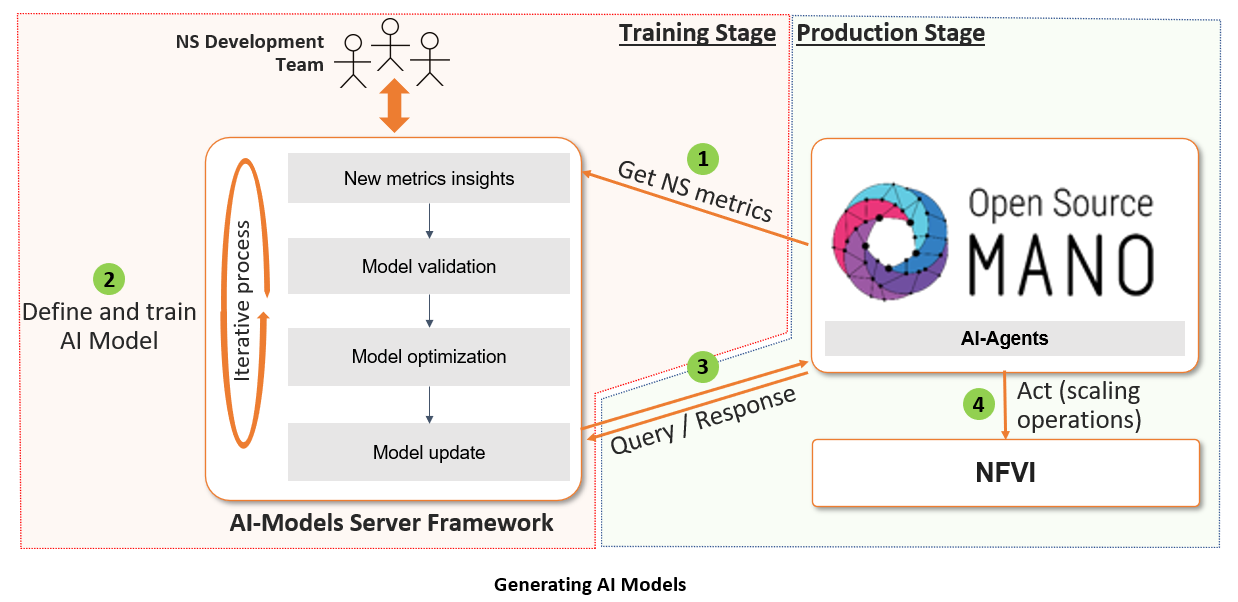

As shown in the figure above, the AI-Agents for OSM solution assumes that there is an AI Model (or a set of them) deployed on the AI Models Server. The AI-Agents attached to the VNFs will query those models to, for example, decide whether or not to apply a scaling action on the VNF to which they are associated. We can imagine the AI Models Server as a regular database hosting a set of data models to support the AI Agent actions. The difference here is that these data models are special models based on artificial intelligence algorithms. Those special models are what we call here AI Models.

Unlike data models in conventional databases, AI Models usually need to be trained. In short, training consists of feeding the models with example data from which they are able to learn and generalise in an algorithmic way (this is what we call Machine Learning). As you probably know, in the field of Artificial Intelligence there are different machine learning paradigms: supervised, unsupervised, reinforcement, and many others derived from these. We will not go into details about this here (you can see an introduction here); we will just mention that the AI Agents for OSM solution is initially designed to work with supervised and unsupervised models, or any other model in which the training and production stages are clearly separated. Other learning models (such as reinforcement learning) that rely on a continuous update of the deployed model are not yet considered, although they are part of our Roadmap. We do not consider this a major limitation for practical applications, since supervised and unsupervised models already cover quite a wide range of use cases.

In any case, what the AI-Agent expects to find in the AI Models Server during the production phase is a valid trained AI Model. To obtain such a model, it must first be trained using data taken from the production environment (or simulated data in the proper format). The following figure shows this way of working in which the training and production stages are clearly separated:

As we see, during the training stage the AI-Agent is used to get the Network Service data needed to train the models hosted in the AI Models Server. This data can be collected from the staging or even the production environment (as you probably know, access to real training data is important for generating accurate AI models). The AI Agent can store the data in a volume that the AI Models development team can access during the training stage.
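One simple way to store collected samples in such a volume is to append them to a CSV file, as in the minimal sketch below. The file layout, the mount path, and the column names are assumptions made for illustration; the solution does not prescribe a storage format.

```python
import csv
from pathlib import Path


def append_training_sample(csv_path, sample, fieldnames):
    """Append one sample (a dict) to a CSV file, writing the header if needed."""
    path = Path(csv_path)
    path.parent.mkdir(parents=True, exist_ok=True)
    # Write the header only when the file is new or empty.
    write_header = not path.exists() or path.stat().st_size == 0
    with path.open("a", newline="") as handle:
        writer = csv.DictWriter(handle, fieldnames=fieldnames)
        if write_header:
            writer.writeheader()
        writer.writerow(sample)


if __name__ == "__main__":
    # Hypothetical mount point and columns, for illustration only.
    fields = ["timestamp", "cpu", "label"]
    append_training_sample(
        "/tmp/ai-agent-volume/samples.csv",
        {"timestamp": 1, "cpu": 0.5, "label": 0},
        fields,
    )
```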

The training stage is of course an iterative offline process implemented by the data engineering team. Using the OSM terminology, the model training stage would be done during Day-1 (steps 1 and 2 in the previous figure).

Once the model is trained and ready for production, it can be queried by the AI-Agents. Based on the responses, the AI Agents apply (or not) the necessary Service Assurance actions (scaling or alerting). This would be done during Day-2 (steps 3 and 4 in the figure).
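The decision taken on each model response can be as simple as the following sketch. It assumes the model returns a single score between 0 and 1; the thresholds and action names are hypothetical and would depend on the model and the use case.

```python
def decide_action(prediction, scale_threshold=0.8, alert_threshold=0.6):
    """Map a model score in [0, 1] to a Service Assurance action, or None."""
    if prediction >= scale_threshold:
        return "scale_out"
    if prediction >= alert_threshold:
        return "alert"
    # Below both thresholds: no action is requested.
    return None


if __name__ == "__main__":
    for score in (0.95, 0.7, 0.1):
        print(score, decide_action(score))
```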

2.3. Integration in the OSM architecture

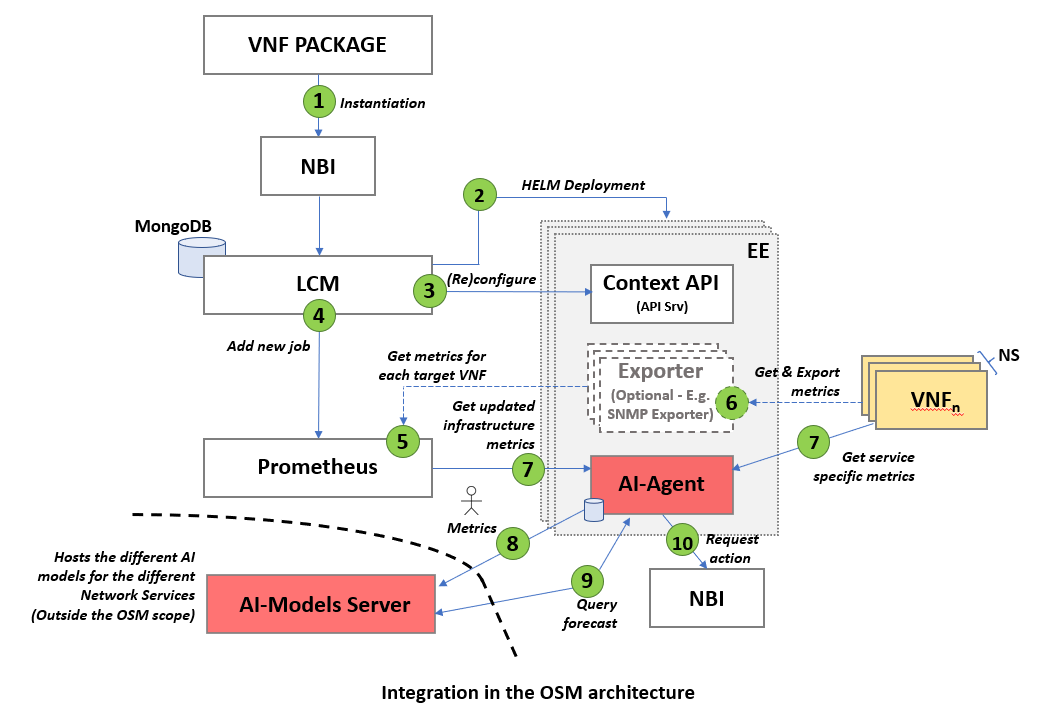

AI Agents are deployed in a way analogous to SNMP exporters in OSM Release EIGHT (see Advanced VNF Monitoring Section). They are modelled as part of the VNF package through a Helm Chart, so they can be instantiated by OSM through its North-Bound interface (NBI; see the figure above).

This approach relies on the dockerized VNF “Execution Environments” (EE) introduced in the same Release EIGHT. EEs are modelled through the Helm Charts mentioned above and implement a lightweight API server that can configure (or reconfigure) the rest of the elements in the environment through OSM Day-1 and Day-2 primitives (labels ‘1’ to ‘4’ in the figure). The EE is intended to provide a deployment environment with default configurations, such as network connectivity with the OSM core elements and with the attached VDU. It is also in charge of providing the runtime configuration that the AI Agents need to locate the instantiated VNF information within OSM.

Once deployed, the AI Agents are able to get metrics from different sources (label ‘7’ in the figure):

- Infrastructure metrics from the OSM Prometheus time-series database.

- VNF metrics, which can be accessed in different ways: directly from the VNF (through a specific API) or through a dedicated metrics exporter (e.g., the SNMP exporter mentioned above; labels ‘5’ and ‘6’).
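For the Prometheus source, a minimal sketch of reading metrics through the standard Prometheus HTTP API (`/api/v1/query`) is shown below. The Prometheus address, the metric name, and the label used in the example query are hypothetical; the actual names depend on how metrics are exported in your OSM deployment.

```python
import json
import urllib.parse
import urllib.request


def build_query_url(prometheus_url, promql):
    """Build the URL of a Prometheus instant query."""
    query = urllib.parse.urlencode({"query": promql})
    return f"{prometheus_url.rstrip('/')}/api/v1/query?{query}"


def extract_values(payload):
    """Return (labels, value) pairs from an instant-vector query response."""
    if payload.get("status") != "success":
        raise ValueError(f"Prometheus query failed: {payload}")
    # Each result carries its labels and a [timestamp, "value"] pair.
    return [
        (item["metric"], float(item["value"][1]))
        for item in payload["data"]["result"]
    ]


def fetch_metric(prometheus_url, promql, timeout=5.0):
    """Run an instant query and return the parsed (labels, value) pairs."""
    url = build_query_url(prometheus_url, promql)
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return extract_values(json.loads(response.read()))


if __name__ == "__main__":
    # Hypothetical address, metric name, and label, for illustration only.
    print(build_query_url("http://prometheus:9090", 'osm_cpu_utilization{ns_id="abc"}'))
```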

Label ‘8’ represents access to the training data collected by the AI Agent from the production or staging environments. This data can be stored in a shared volume, from which the AI Models development team retrieves it for use during the training stage.

Once the necessary AI Models are trained and deployed on the AI Models Server, the AI-Agents can execute Service Assurance actions by querying the AI Models Server through a REST API (label ‘9’) and by requesting the necessary Service Assurance actions from OSM through its NBI (label ‘10’).
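As a rough sketch of the last step (label ‘10’), the code below builds a VNF scale-out request for the OSM NBI. The endpoint path and payload field names reflect our understanding of the NBI scaling operation and should be checked against the NBI documentation of your OSM release; the NBI address, NS instance ID, and scaling group name are hypothetical, and authentication (a bearer token in a real request) is omitted.

```python
import json


def build_scale_request(nbi_url, ns_instance_id, scaling_group,
                        member_vnf_index, scale_out=True):
    """Build the URL and JSON body of an NBI request to scale a VNF.

    Assumption: the path and field names follow the OSM NBI scaling
    operation; verify them against your OSM release before use.
    """
    url = f"{nbi_url.rstrip('/')}/osm/nslcm/v1/ns_instances/{ns_instance_id}/scale"
    payload = {
        "scaleType": "SCALE_VNF",
        "scaleVnfData": {
            "scaleVnfType": "SCALE_OUT" if scale_out else "SCALE_IN",
            "scaleByStepData": {
                "scaling-group-descriptor": scaling_group,
                "member-vnf-index": member_vnf_index,
            },
        },
    }
    return url, json.dumps(payload)


if __name__ == "__main__":
    # Hypothetical NBI address and identifiers, for illustration only.
    url, body = build_scale_request("https://osm-nbi:9999", "ns-1234", "vdu_autoscale", "1")
    print(url)
    print(body)
```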